Guba and Lincoln’s work, including “Competing Paradigms in Qualitative Research” (1994), is now considered by many to be a necessary part of the background to any discussion of (educational) research. I’ve been astonished by how many people who did MAs in TESOL and/or Applied Linguistics from the nineties onwards were taught to regard Guba and Lincoln’s work as if it were part of the canon of the philosophy of science, rather than stuff which nobody in that field takes seriously, and which very few scientists have even heard of. Below is another attempt to set the record straight.

Research Paradigms

Following Guba and Lincoln, Taylor and Medina (2013) explain that a “research paradigm” comprises

- a view of the nature of reality (i.e., ontology) – whether it is external or internal to the knower;

- a related view of the type of knowledge that can be generated and standards for justifying it (i.e., epistemology);

- and a disciplined approach to generating that knowledge (i.e., methodology).

However, scholars of scientific method and the philosophy of science, such as Kuhn, Popper, Lakatos, Feyerabend and Laudan, don’t discuss “Research Paradigms” in this way, because they all take a realist ontology and epistemology for granted. That is, they all assume that an external world exists independently of our perceptions of it; that it is possible to study different phenomena in this world through observation and reflection, to make meaningful statements about them, and to improve our knowledge of them. Furthermore, they all agree that scientific method requires hypotheses to be tested by means of empirical observation, logic and rational argument.

So what’s all this talk of “Research Paradigms” about? According to Taylor and Medina, the most “traditional” paradigm is positivism:

Positivism is a research paradigm that is very well known and well established in universities worldwide. This ‘scientific’ research paradigm strives to investigate, confirm and predict law-like patterns of behaviour, and is commonly used in graduate research to test theories or hypotheses.

Positivism

In fact, positivism refers to a particular form of empiricism; it is a philosophical view primarily concerned with the issue of reliable knowledge. Comte coined the term around 1830. Mach led a second wave of positivism fifty years later, seeking to root out the “contradictory” religious elements in Comte’s work. Finally, in the 1920s, the Vienna Circle (key members included Schlick, Carnap and Gödel; Russell, Whitehead and Wittgenstein were interested parties) developed a programme labelled “Logical Positivism”, which consisted of cleaning up language so as to get rid of paradoxes, and then limiting science to strictly empirical statements. Their efforts lasted less than a decade, and by the time the Second World War started, the movement had broken up in complete disarray.

It’s my own invention

When Guba & Lincoln – and now millions of others, it seems – use the term “positivist”, they’re using a definition which has nothing to do with the positivist movements of Comte, Mach and Carnap, but is rather a politically motivated caricature of “the scientist”. And the “positivist paradigm” refers to a set of beliefs, etc., which constructivists like Lincoln and Guba want to attribute to scientists in general. Positivism “strives to investigate, confirm and predict law-like patterns of behaviour”. Positivists work “in natural science, physical science and, to some extent, in the social sciences, especially where very large sample sizes are involved”. Positivism stresses “the objectivity of the research process”. It “mostly involves quantitative methodology, utilizing experimental methods”.

As opposed to positivism, we have various other paradigms, including post-positivism, the interpretive paradigm, and the critical paradigm. But the real alternative to the positivist paradigm is the postmodernist paradigm, or the constructivist paradigm as Lincoln and Guba prefer to call it.

The Strong Programme

We can trace Lincoln and Guba’s constructivism back to the 1970s, when some of those working in the sociology of science, taking inspiration from the “Strong Programme” developed by Barnes (1974) and Bloor (1976), changed their aim from the established one of analysing the social context in which scientists work to the far more radical, indeed audacious, one of explaining the content of scientific theories themselves. According to Barnes, Bloor and their followers, the content of scientific theories is socially determined, and there is no place whatsoever for the philosophy of science and all the epistemological problems that go with it. Since science is a social construction, it is the business of sociology to explain the social, political and ethical factors that determine why different theories are accepted or rejected.

An example of this approach in action is sociologist Ferguson’s explanation of the paradigm shift in physics which followed Einstein’s publication of his work on relativity.

The inner collapse of the bourgeois ego signalled an end to the fixity and systematic structure of the bourgeois cosmos. One privileged point of observation was replaced by a complex interaction of viewpoints.

The new relativistic viewpoint was not itself a product of scientific “advances”, but was part, rather, of a general cultural and social transformation which expressed itself in a variety of modern movements. It was no longer conceivable that nature could be reconstructed as a logical whole. The incompleteness, indeterminacy, and arbitrariness of the subject now reappeared in the natural world. Nature, that is, like personal existence, makes itself known only in fragmented images. (Ferguson, cited in Gross and Levitt, 1998: 46)

Here, Ferguson, in all apparent seriousness, suggests that Einstein’s relativity theory is to be understood not in terms of the development of a progressively more powerful theory of physics which offers an improved explanation of the phenomena in question, but rather in terms of the evolution of “bourgeois consciousness”.

Postmodernism

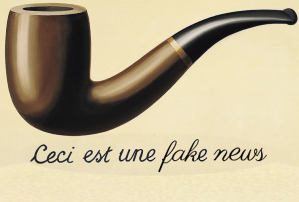

The basic argument of postmodernists is that if you believe something, then it is “real”, and thus scientific knowledge is not powerful because it is true; it is true because it is powerful. The question should not be “What is true?”, but rather “How did this version of what is believed to be true come to dominate in these particular social and historical circumstances?” Truth and knowledge are culturally specific. If we accept this argument, then we have come to the end of the modern project, and we are in a “post-modern” world.

Here are a few snippets from postmodernist texts (see Gross and Levitt, 1998, for references):

- Everything has already happened….nothing new can occur. There is no real world (Baudrillard, 1992: 64).

- Foucault’s study of power and its shifting patterns is a fundamental concept of postmodernism. Foucault is considered a post-modern theorist because his work upsets the conventional understanding of history as a chronology of inevitable facts and replaces it with underlayers of suppressed and unconscious knowledge in and throughout history. (Appignanesi, 1995: 45).

- sceptical post modernists look for substitutes for method because they argue we can never really know anything (Rosenau 1993: 117).

- Postmodern interpretation is introspective and anti-objectivist which is a form of individualized understanding. It is more a vision than data observation (Rosenau 1993: 119).

- There is no final meaning for any particular sign, no notion of unitary sense of text, no interpretation can be regarded as superior to any other (Latour 1988: 182).

Constructivism

Lincoln and Guba’s (1985) “constructivist paradigm” adopts an ontology and epistemology which are idealist (“what is real is a construction in the minds of individuals”), pluralist and relativist:

There are multiple, often conflicting, constructions and all (at least potentially) are meaningful. The question of which or whether constructions are true is sociohistorically relative (Lincoln and Guba, 1985: 85).

The observer cannot be neatly disentangled from the observed in the activity of inquiring into constructions. Constructions in turn are resident in the minds of individuals:

They do not exist outside of the persons who created and hold them; they are not part of some “objective” world that exists apart from their constructors (Lincoln and Guba, 1985: 143).

Thus constructivism is based on the principle of interaction.

The results of an enquiry are always shaped by the interaction of inquirer and inquired into which renders the distinction between ontology and epistemology obsolete: what can be known and the individual who comes to know it are fused into a coherent whole (Guba, 1990: 19).

Trying to explain how one might decide between rival constructions, Lincoln says:

Although all constructions must be considered meaningful, some are rightly labelled “malconstruction” because they are incomplete, simplistic, uninformed, internally inconsistent, or derived by an inadequate methodology. The judgement of whether a given construction is malformed can only be made with reference to the paradigm out of which the construction operates; in other words, criteria or standards are framework-specific, so, for instance, a religious construction can only be judged adequate or inadequate utilizing the particular theological paradigm from which it is derived (Lincoln, 1990: 144).

Discussion

There is in constructivism, as in postmodernism, an obvious attempt to throw off the blinkers of modernist rationality, in order to grasp a more complex, subjective reality. Their proponents feel that the modern project has failed, and I have some sympathy for that view. There is a great deal of injustice in the world, and there are good grounds for thinking that a ruling minority who benefit from the way economic activity is organised are responsible for manipulating information in general, and research programmes in particular, in extremely sophisticated ways, so as to bolster and increase their power and control. To the extent that postmodernists and constructivists feel that science and its discourse are riddled with a repressive ideology, and to the extent that they feel it necessary to develop their own language and discourse to combat that ideology, they are making a political statement, as they are when they say that “Theory conceals, distorts, and obfuscates, it is alienated, disparated, dissonant, it means to exclude, order, and control rival powers” (Culler, 1982: 67). They have every right to express such views, and it is surely a good idea to encourage people to scrutinise texts, to try to uncover their “hidden agendas”. Likewise, the constructivist educational programme can be welcomed as an attempt to follow the tradition of humanistic liberal education.

The constructivists obviously have a point when they say (not that they said it first) that science is a social construct. Science is certainly a social institution, and scientists’ goals, their criteria, their decisions and achievements are historically and socially influenced. And all the terms that scientists use, like “test”, “hypothesis”, “findings”, etc., are invented and given meaning through social interaction. Of course. But – and here is the crux – this does not make the results of social interaction (in this case, a scientific theory) an arbitrary consequence of it. Popper, in reply to criticisms of his naïve falsification position, defends the idea of objective knowledge by arguing that it is precisely through the process of mutual criticism incorporated into the institution of science that the individual shortcomings of its members are largely cancelled out.

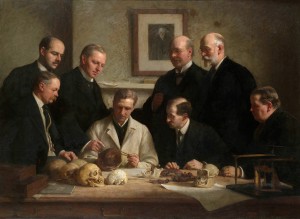

As Bunge points out, “The only genuine social constructions are the exceedingly uncommon scientific forgeries committed by a team” (Bunge, 1996: 104). Bunge gives the example of the Piltdown man, which was “discovered” by two pranksters in 1912, authenticated by many experts, and unmasked as a fake in 1950. “According to the existence criterion of constructivism-relativism we should admit that the Piltdown man did exist – at least between 1912 and 1950 – just because the scientific community believed in it” (Bunge, 1996: 105).

The heart of the relativists’ confusion is the deliberate conflation of two separate issues: claims about the existence or non-existence of particular things, facts and events, and claims about how one arrives at beliefs and opinions. Whether or not the Piltdown man is a million years old is a question of fact. What the scientific community thought about the skull it examined in 1912 is also a question of fact. When we ask what led that community to believe in the hoax, we are looking for an explanation of a social phenomenon, and that is a separate issue. The fact that for forty years the Piltdown man was supposed to be a million years old does not make him so, however interesting it may be that so many people believed it.

Guba and Lincoln say “There are multiple, often conflicting, constructions and all (at least potentially) are meaningful. The question of which or whether constructions are true is sociohistorically relative”. This is a perfectly acceptable comment, as far as it goes. If Guba and Lincoln argue that the observer cannot be neatly disentangled from the observed in the activity of inquiry, then again the point can be well taken. But when they insist that constructions are exclusively in the minds of individuals, that “they do not exist outside of the persons who created and hold them; they are not part of some ‘objective’ world that exists apart from their constructors”, and that “what can be known and the individual who comes to know it are fused into a coherent whole”, then they have disappeared into a Humpty Dumpty world where anything can mean whatever anybody wants it to mean.

A radically relativist epistemology rules out the possibility of data collection, of empirical tests, and of any rational criterion for judging between rival explanations; I believe those doing research and building theories should have no truck with it. Solipsism and science – like solipsism and anything else, of course – do not go well together. If postmodernists reject any understanding of time because “the modern understanding of time controls and measures individuals”, if they argue that no theory is more correct than any other, if they believe that “everything has already happened”, that “there is no real world”, that “we can never really know anything”, then I think they should continue their “game”, as they call it, in their own way, and let those of us who prefer to work with more rationalist assumptions get on with scientific research.

References

(Citations from Taylor & Medina, and Guba & Lincoln can be found in their articles which you can download from the links above.)

Barnes, B. (1974) Scientific Knowledge and Sociological Theory. London: Routledge and Kegan Paul.

Barnes, B. and Bloor, D. (1982) Relativism, Rationalism, and the Sociology of Science. In Hollis, M. and Lukes, S. (eds) Rationality and Relativism, 21-47. Oxford: Basil Blackwell.

Bloor, D. (1976) Knowledge and Social Imagery. London: Routledge and Kegan Paul.

Bunge, M. (1996) In Praise of Intolerance to Charlatanism in Academia. In Gross, P. R., Levitt, N. and Lewis, M. W. (eds) The Flight From Science and Reason. Annals of the New York Academy of Sciences, Vol. 777, 96-116.

Culler, J. (1982) On Deconstruction: Theory and Criticism after Structuralism. Ithaca: Cornell University Press.

Gross, P. and Levitt, N. (1998) Higher Superstition. Baltimore: Johns Hopkins University Press.

Lincoln, Y. S. and Guba, E. G. (1985) Naturalistic Inquiry. Beverly Hills, CA: Sage.

Latour, B. and Woolgar, S. (1979) Laboratory Life: The Social Construction of Scientific Facts. London: Sage.