(Note: This is a copy of a post from my CriticELT blog. I think it’s relevant to the subject of teacher trainers because, with the notable exceptions of Scott Thornbury and Luke Meddings, leading teacher trainers and TD SIGs work on the assumption that modern coursebooks are an essential tool for current ELT practice.)

Introduction

Wilkins (1976) distinguished between two types of syllabus:

1. A ‘synthetic’ syllabus: items of language are presented one by one in a linear sequence to the learner. The learner is expected to build up, or ‘synthesize’, the knowledge incrementally.

2. An ‘analytic’ syllabus: the learner does the ‘analysis’, i.e. ‘works out’ the system, through engagement with natural language data.

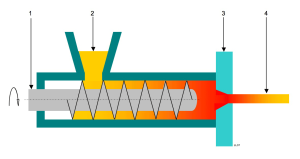

Coursebooks embody a synthetic approach to syllabus design. Coursebook writers take the target language (the L2) as the object of instruction, and they divide the language up into bits of one kind or another – words, collocations, grammar rules, sentence patterns, notions and functions, for example – which are presented and practiced in a sequence. The criteria for sequencing can be things like valency, criticality, frequency, or saliency, but the most common criterion is ‘level of difficulty’, which is intuitively defined by the writers themselves.

The approach is thus based on taking incremental steps towards proficiency; “items”, “entities”, sliced-up bits of the target language are somehow accumulated through a process of presentation, practice and re-cycling, and communicative competence is the result.

Characteristics

Different coursebooks claim to use different types of syllabus – grammatical, lexical, or notional-functional, for example – deal with different topics, adopt different styles, and so on; but, in the end, the vast majority of them use synthetic syllabuses with the same features described above, and they all give pride of place to explicit teaching and learning. The syllabus is delivered by the teacher, who first presents the bits of the L2 chosen by the coursebook writers (in written and spoken texts, grammar boxes, vocabulary lists, diagrams, pictures, and so on), and then leads students through a series of activities aimed at practicing the language, like drills, written exercises, discussions, games, tasks and practice of the four skills.

Among the coursebooks currently on sale from UK and US publishers, and used around the world, are the following:

Headway; English File; Network; Cutting Edge; Language Leader; English in Common; Speakout; Touchstone; Interchange; Mosaic; Inside Out; Outcomes.

Each of these titles consists of a series of five or six books aimed at different levels, from beginner to advanced, and offers a Student’s Book, a Teacher’s Book and a Workbook, plus other materials such as video and on-line resources. Each Student’s Book at each level is divided into a number of units, and each unit consists of a number of activities which teachers lead students through. The Student’s Book is designed to be used systematically from start to finish – not just dipped into wherever the teacher fancies. The different activities are designed to be done one after the other; so that Activity 1 leads into Activity 2, and so on. Two examples follow.

In New Headway, Pre-Intermediate, Unit 3, we see this progression of activities:

- Grammar (Past tense) leads into ( ->)

- Reading Text (Travel) ->

- Listening (based on reading text) ->

- Reading (Travel) ->

- Grammar (Past tense) ->

- Pronunciation ->

- Listening (based on Pron. activity) ->

- Discussing Grammar ->

- Speaking (A game & News items) ->

- Listening & Speaking (News) ->

- Dictation (from listening) ->

- Project (News story) ->

- Reading and Speaking (About the news) ->

- Vocabulary (Adverbs) ->

- Listening (Adverbs) ->

- Grammar (Word order) ->

- Everyday English (Time expressions)

And if we look at Outcomes Intermediate, Unit 2, we see this:

- Vocab. (feelings) ->

- Grammar (be, feel, look, seem, sound + adj.) ->

- Listening (How do they feel?) ->

- Developing Conversations (Response expressions) ->

- Speaking (Talking about problems) ->

- Pronunciation (Rising & falling stress) ->

- Conversation Practice (Good / bad news) ->

- Speaking (Physical greetings) ->

- Reading (The man who hugged) ->

- Vocabulary (Adj. Collocations) ->

- Grammar (ing and ed adjs.) ->

- Speaking (based on reading text) ->

- Grammar (Present tenses) ->

- Listening (Shopping) ->

- Grammar (Present cont.) ->

- Developing conversations (Excuses) ->

- Speaking (Ideas of heaven and hell).

All the other coursebooks mentioned are similar in that they consist of a number of units, each of them containing activities involving the presentation and practice of target versions of L2 structures, vocabulary, collocations, functions, etc., using the 4 skills. All of them assume that the teacher will lead students through each unit and do the succession of activities in the order that they’re set out. And all of them wrongly assume that if learners are exposed to selected bits of the L2 in this way, one bit at a time in a pre-determined sequence, then, after enough practice, the new bits, one by one, in the same sequence, will become part of the learners’ growing L2 competence. This false assumption flows from a skill-based view of second-language acquisition, which sees language learning as the same as learning any other skill, such as driving a car or playing the piano.

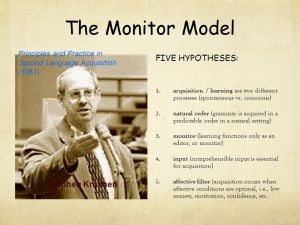

Skill-based theories of SLA

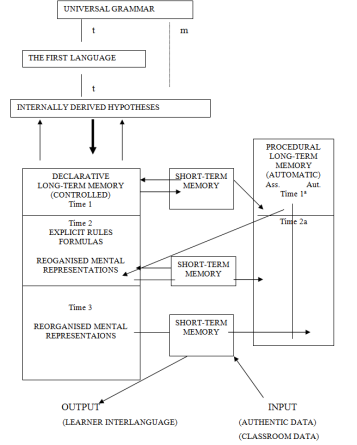

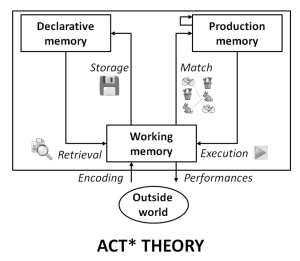

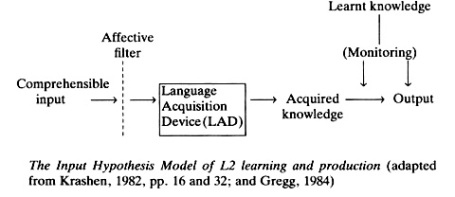

The most well-known of these theories is John Anderson’s (1983) ‘Adaptive Control of Thought’ model, which makes a distinction between declarative knowledge (conscious knowledge of facts) and procedural knowledge (unconscious knowledge of how an activity is done). When applied to second language learning, the model suggests that learners are first presented with information about the L2 (declarative knowledge) and then, via practice, this is converted into unconscious knowledge of how to use the L2 (procedural knowledge). The learner moves from controlled to automatic processing and, through intensive linguistically focused rehearsal, achieves increasingly faster access to, and more fluent control over, the L2 (see DeKeyser, 2007, for example).

The fact that nearly everybody successfully learns at least one language as a child without starting with declarative knowledge, and that millions of people learn additional languages without studying them (migrant workers, for example), might make one doubt that learning a language is the same as learning a skill such as driving a car. Furthermore, the phenomenon of L1 transfer doesn’t fit well with a skill-based approach, and neither do putative critical periods for language learning. But the main reason for rejecting such an approach is that it contradicts SLA research findings related to interlanguage development.

Firstly, it doesn’t make sense to present grammatical constructions one by one in isolation because most of them are inextricably inter-related. As Long (2015) says:

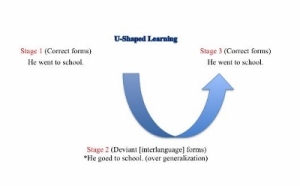

Producing English sentences with target-like negation, for example, requires control of word order, tense, and auxiliaries, in addition to knowing where the negator is placed. Learners cannot produce even simple utterances like “John didn’t buy the car” accurately without all of those. It is not surprising, therefore, that interlanguage development of individual structures has very rarely been found to be sudden, categorical, or linear, with learners achieving native-like ability with structures one at a time, while making no progress with others. Interlanguage development just does not work like that. Accuracy in a given grammatical domain typically progresses in a zigzag fashion, with backsliding, occasional U-shaped behavior, over-suppliance and under-suppliance of target forms, flooding and bleeding of a grammatical domain (Huebner 1983), and considerable synchronic variation, volatility (Long 2003a), and diachronic variation.

Interlanguages

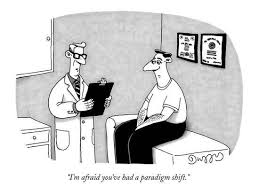

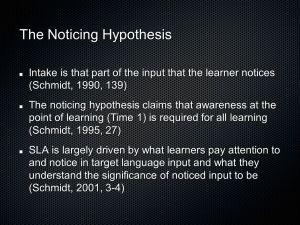

Secondly, research has shown that L2 learners follow their own developmental route, a series of interlocking linguistic systems called “interlanguages”. Myles (2013) states that the findings on the route of interlanguage (IL) development are among the most well-documented findings of SLA research of the past few decades. She asserts that the route is “highly systematic” and that it “remains largely independent of both the learner’s mother tongue and the context of learning (e.g. whether instructed in a classroom or acquired naturally by exposure)”. The claim that instruction can influence the rate but not the route of IL development is probably the most widely accepted claim among SLA scholars today.

Selinker (1972) introduced the construct of interlanguages to explain learners’ transitional versions of the L2. Studies show that interlanguages exhibit common patterns and features, and that learners pass through well-attested developmental sequences on their way to different end-state proficiency levels. Examples of such sequences are found in morpheme studies; the four-stage sequence for ESL negation; the six-stage sequence for English relative clauses; and the sequence of question formation in German (see Hong and Tarone, 2016, for a review). Regardless of the order or manner in which target-language structures are presented in coursebooks, learners analyse input and create their own interim grammars, slowly mastering the L2 in roughly the same manner and order. The acquisition sequences displayed in interlanguage development don’t reflect the sequences found in any of the coursebooks mentioned; on the contrary, they prove to be impervious to coursebooks, as they are to different classroom methodologies, or even whether learners attend classroom-based courses or not.

Note that interlanguage development refers not just to grammar; pronunciation, vocabulary, formulaic chunks, collocations and sentence patterns are all part of the development process. To take just one example, U-shaped learning curves can be observed in learning the lexicon. Learners have to master the idiosyncratic nature of words, not just their canonical meaning. When learners encounter a word in a correct context, the word is not simply added to a static cognitive pile of vocabulary items. Instead, they experiment with the word, sometimes using it incorrectly, thus establishing where it works and where it doesn’t. Only by passing through a period of incorrectness, in which the word is used in a variety of ways, can they climb back up the U-shaped curve.

Interlanguage development takes place in line with what Corder (1967) referred to as the internal “learner syllabus”, not the external syllabus embodied in coursebooks. Students don’t learn different bits of the L2 when and how a coursebook says that they should, but only when they are developmentally ready to do so. As Pienemann demonstrates (e.g. Pienemann, 1987), learnability (i.e., what learners can process at any one time) determines teachability (i.e., what can be taught at any one time). Coursebooks flout the learnability and teachability conditions; they don’t respect the learner’s internal syllabus.

False Assumptions made by Coursebooks

To summarise the above, we may list the 3 false assumptions made by coursebooks.

Assumption 1: In SLA, declarative knowledge converts to procedural knowledge. Wrong! No such simple conversion occurs. Knowing that the past tense of “has” is “had”, and then doing some controlled practice, does not lead to fluent and correct use of “had” in real-time communication.

Assumption 2: SLA is a process of mastering structural items one by one and accumulating them. Wrong! All the items are inextricably inter-related. As Long (2015, 67) says:

The assumption that learners can move from zero knowledge to mastery of negation, the present tense, subject-verb agreement, conditionals, relative clauses, or whatever, one at a time, and move on to the next item in the list, is a fantasy.

Assumption 3: Learners learn what they’re taught when they’re taught it. Wrong – as every teacher knows! Pienemann (1987) has demonstrated that teachability is constrained by learnability.

Objections to Coursebooks

1. Using a coursebook means that most classroom time is devoted to the teacher talking about the L2. In order to develop communicative competence, better results are obtained when most classroom time is devoted to students talking in the L2 about matters that are relevant to their needs.

2. Presenting and practicing a pre-set series of linguistic forms (pronunciation, grammar, notions, functions, lexical items, collocations, etc.) simply doesn’t work – it contradicts the robust results of SLA research into how people learn an L2. Even if a form coincidentally happens to be learnable (by some students in a class), and so teachable, at the time it is presented, teaching via PPP (presentation, practice, production) doesn’t ensure that it will be learned.

3. The approach is counterproductive: both teachers and students feel frustrated by the constant mismatch between teaching and learning.

4. The cutting up of language into manageable pieces (or “McNuggets” as Thornbury calls them) usually results in impoverished input and output opportunities.

5. Results are poor. It’s hard to get reliable data on this, but evidence strongly suggests that most students who do coursebook-driven courses do not achieve the level of proficiency they expected.

6. Both the content and methodology of the course are externally pre-determined and imposed. This point will be developed below.

7. Coursebooks pervade the ELT industry and stunt the growth of innovation and teacher training. The publishing companies that produce coursebooks also produce exams, teacher training courses and everything else connected to ELT. Publishing companies spend tens of millions of dollars on marketing, aimed at persuading stakeholders that coursebooks represent the best practical way to manage ELT. In the powerful British ELT establishment, key players like the British Council and Cambridge Assessment have huge influence on teacher training and language testing and they all accept the coursebook as central to ELT practice. TESOL and IATEFL, bodies that are supposed to represent teachers’ interests, have also succumbed to the influence of the big publishers, as their annual conferences make clear. So the coursebook rules, at the expense of teachers, of good educational practice, and of language learners.

8. Coursebooks represent the commodification of ELT. Grammar, vocabulary, lexical chunks, discourse, the whole messy, chaotic stuff of language is neatly packaged into items, granules, chunks, served up in sanitised short texts and summarised in lists and tables. Communicative competence itself, as Leung (cited in Thornbury 2014) points out, is turned into “inert and decomposed knowledge”, and language teaching is increasingly prepackaged and delivered as if it were a standardised, marketable product. ELT becomes just another market transaction; in this case between de-skilled teachers, who pass on a set of standardised, testable knowledge and skills, and learners, who have been reconfigured as consumers.

Brian Tomlinson’s Reviews

I’ve written a post on Brian Tomlinson’s reviews of coursebooks, but here let me just mention what he and co-author Masuhara said in their most recent review of coursebooks, including Headway and Outcomes. Tomlinson and Masuhara found that none of the coursebooks was likely to be effective in facilitating long-term acquisition. They described the texts found in the books as short, contrived, inauthentic, mundane, decontextualised, unappealing, uninteresting, dull. They described the activities as unchallenging, unimaginative, unstimulating, mechanical, superficial.

Referring to the New Headway and Outcomes Intermediate Student’s Books, they say, “the focus is on explicit learning of language rather than engagement”. Students are led through a course which consists largely of teachers presenting and practicing bits of the language in such a way that only shallow processing is required, and, as a result, only short-term memory is engaged. There are very few opportunities for cognitive engagement; most of the time, teachers talk about the language, and students are asked to read or listen to short, artificial, unchallenging texts devised to illustrate language points. When they are not being told about this or that aspect of the language, students are being led through a succession of frequently mechanical linguistic decoding and encoding activities which are unlikely to have any permanent effects on interlanguage development.

In their concluding remarks, the authors say that the explanation for these unsatisfactory results is simple: publishers’ interests prevailed. Publishers like profit; they’re risk averse and have no interest in any radical reform of a model that has endured for over thirty years. They choose to give priority to face validity and the achievement of “instant progress”, rather than to helping learners towards the eventual achievement of communicative competence.

An Alternative: The Analytic or Process Syllabus

An analytic syllabus rejects the method of cutting up a language into manageable pieces, and instead organises the syllabus according to the needs of the learners and the kinds of language performance that are necessary to meet those needs. “Analytic” refers not to what the syllabus designer does, but to what learners are invited to do. Grammar isn’t “taught” as such; rather learners are provided with opportunities to engage in meaningful communication on the assumption that they will slowly analyse and induce language rules, by exposure to the language and by the teacher providing scaffolding, feedback, and information about the language.

Breen’s (1987) distinction between product and process syllabuses contrasts the focus on content and the pre-specification of linguistic or skill objectives with a “natural growth” approach which aims to expose the learners to real-life communication without any pre-selection or arrangement of items. Figure 1, below, summarises the differences.

A process approach focuses on how the language is to be learned. There is no pre-selection or arrangement of items; the syllabus is negotiated between learners and teacher as joint decision makers, and emphasises the process of learning rather than the subject matter. No coursebook is used. The teacher implements the evolving syllabus in consultation with the students who participate in decision-making about course objectives, content, activities and assessment.

Discussion

Hugh Dellar has made a number of attempts to defend coursebooks, and here are some examples of what he’s said:

- “Attempts to talk about coursebook use as one unified thing that we all understand and recognise are incredibly myopic. Coursebooks differ greatly in terms of the way they frame the world and in terms of the questions and positions they expect or allow students to take towards these representations. …. So hopefully it’s clear that far from being one homogenous unified mass of media, coursebooks are wildly heterogeneous in both their world views and their presentations of language.”

- “Teachers mediate coursebooks”.

- “The kind of broad brush smearing of coursebooks you’re engaging in does those teachers a profound disservice as it’s essentially denying the possibility of them still being excellent practitioners. I’d also suggest that grammar DOES still seem to be the primary – though not the only – thing that the vast majority of teachers around the world expect and demand from material, whether you like it or not (and I don’t, personally, but there you go. We live in an imperfect world). To pretend this isn’t the case or to denigrate all those who believe this is wipe out a huge swathe of the teaching profession and preach mainly to the converted.”

- Teachers in very poor parts of the world would just love to have coursebooks.

- Coursebooks are based on the presentation and practice of discrete bits of grammar because that’s what teachers want.

- Coursebooks help teachers do their jobs.

- Coursebooks save time on lesson preparation.

- Coursebooks meet student and parental expectations.

These remarks are echoed by others (e.g. Harmer, Scrivener, Prodromou, Ur, Lansford, Walter), and can be summed up by the following:

- Coursebooks are not all the same.

- Teachers adapt, modify and supplement them.

- They’re convenient.

- They give continuity and direction to a language course.

I accept that some coursebooks don’t follow the synthetic syllabus I describe, but these are the exceptions. All the coursebooks I list at the start of this article, including Dellar’s, and I’d say those that make up 90% of the total sales of coursebooks worldwide, use a synthetic syllabus and make the three false assumptions listed above. All the stuff about coursebooks differing greatly “in terms of the way they frame the world and in terms of the questions and positions they expect or allow students to take towards these representations” has absolutely no relevance to the arguments made against them.

As for teachers adapting, modifying and supplementing coursebooks, the question is to what extent they do so. If they do so to a great extent, then the coursebook no longer serves as the syllabus, which rather contradicts the main point of having a coursebook, and one wonders how they can justify getting their students to buy the book if it’s used, let’s say, only 30% of the time. If they only modify and supplement to a small extent, then the coursebook drives the course, learners are led through a pre-determined series of steps, and my argument applies. The most important thing to note is that what teachers actually do is ameliorate coursebooks; they make them less terrible, more bearable, in dozens of different clever and inventive ways. But this, of course, is no argument in favour of the coursebook itself; indeed, to the extent that students learn, it will be more despite than because of the damn coursebook.

Which brings us to the claim that the coursebook is convenient, time-saving, etc. Even if it’s true (which it won’t be if you spend lots of time adapting, modifying and supplementing, i.e. ameliorating, it), the trouble is, it doesn’t work: students don’t learn what they’re taught. And that applies to the other arguments used to defend coursebooks, such as that parents expect their kids to use them, that they give direction to the course, and so on: such arguments simply ignore the evidence that students do not, indeed cannot, learn in the way assumed by a coursebook.

Thus, the points above fail to address the main criticisms levelled against coursebooks, which are that they fly in the face of robust research findings and that they deprive teachers and learners of control of the learning process, leading to a lose-lose classroom environment. In order to reply to these arguments, those wishing to defend coursebooks must first confront the three false assumptions on which coursebook use is based (i.e. they must confront the evidence of how SLA actually happens) and they must then argue the case for dictating what is learned. That coursebooks are the dream of teachers working in Ethiopia; that coursebooks are cherished by millions of teachers who just really love them; that the Headway team have succeeded in keeping their products fresh and lively; that Outcomes includes recordings of people who don’t have RP accents; that coursebooks are mediated by teachers; that coursebooks are here to stay, so get real and get used to it; none of these statements does anything to answer the case against them, and none carries any weight for those who wish to base their teaching practice on critical thinking and rational argument. No matter how “different” coursebooks are, or how flexibly they can be used, coursebooks rely on false assumptions about L2 learning, and impose a syllabus on learners who are largely excluded from decisions about what and how they learn.

Managing a process syllabus is no more difficult than mastering the complexities of a modern coursebook. All you need to get started is a materials bank and a crystal-clear explanation of roles and procedures. Part II of Breen (1987) provides a framework; the collection of articles edited by Breen and Littlejohn (2000) has at least five really helpful “road maps”; Meddings and Thornbury (2009) give a detailed account of their approach in their excellent book; and I outline a process syllabus on my blog. As befits an approach based on libertarian, co-operative educational principles, a process syllabus is best seen in local rather than global settings. If the managers of local ELT centres have the will to break the grip of the coursebook, they only have to make a small initial investment in local training and materials, and then support teachers in their efforts to involve their students in the new venture. I dare say that such efforts will transform the learning experience of everybody involved.

Conclusion

Coursebooks oblige teachers to work within a framework where students are presented with and then practice dislocated bits of English in a sequence which is pre-determined and externally imposed on them by coursebook writers. Most teachers have little say in the syllabus design which shapes their work, and their students have even less say in what and how they’re taught. Furthermore, results of coursebook-based teaching are bad; most learners don’t reach the level they aim for, and most don’t reach the level of proficiency the coursebook promises (English Proficiency Index, 2015). At the same time, alternatives to coursebook-driven ELT which are much more attuned to what we know about psycholinguistic, cognitive, and socio-educational principles for good language teaching don’t get the exposure or the fair critical evaluation that they deserve.

Despite flying in the face of what we know about L2 learning, despite denying teachers and learners a decision-making voice, and despite poor results, the coursebook dominates current ELT practice to an alarming extent. The main pillars of the ELT establishment, from teacher organisations like TESOL and IATEFL, through bodies like the British Council, examination boards like Cambridge English Language Assessment and TEFL, to the teacher training certification bodies like Cambridge and Trinity, all support the use of coursebooks.

The increasing domination of coursebooks in a global ELT industry worth close to $200 billion (Pearson, 2016) means that they’re not just a symptom but a major cause of the current lose-lose situation we find ourselves in, where both teachers and learners are restrained and restricted by the demonstrably faulty methodological principles which coursebooks embody. I think we have a responsibility to raise awareness of the damage that coursebooks are doing, and to fight against the suffocating effects of continued coursebook consumption.

References

Anderson, J. R. (1983). The architecture of cognition. Cambridge, MA: Harvard University Press.

Breen, M.P. (1987) Contemporary Paradigms in Syllabus Design. Part I. Language Teaching, 20, pp 81-92.

Breen, M.P. (1987) Contemporary Paradigms in Syllabus Design. Part II. Language Teaching, 20 (3).

Breen, M.P. and Littlejohn, A. (2000) Classroom Decision Making: Negotiation and Process Syllabuses in Practice. Cambridge: CUP.

English Proficiency Index (2015) Accessed from http://www.ef.edu/epi/ 9th November, 2015

Hong, Z. and Tarone, E. (Eds.) (2016) Interlanguage: Forty Years Later. Amsterdam: Benjamins.

Long, M.H. (2011) “Language Teaching”. In Doughty, C. and Long, M. Handbook of Language Teaching. NY Routledge.

Long, M.H. (2015) SLA and Task Based Language Teaching. N.Y., Routledge.

Long, M.H. & Crookes, G. (1993). Units of analysis in syllabus design: the case for the task. In G. Crookes & S.M. Gass (Eds.). Tasks in a Pedagogical Context. Cleveland, UK: Multilingual Matters. 9-44.

Meddings, L. and Thornbury, S. (2009) Teaching Unplugged. Delta.

Mitchell, R. and Myles, F. (2004) Second Language Learning Theories. London: Arnold.

Myles, F. (2013) Theoretical approaches to second language acquisition research. In Herschensohn, J. & Young-Scholten, M. (Eds.) The Cambridge Handbook of Second Language Acquisition. Cambridge: CUP.

Ortega, L. (2009) Sequences and Processes in Language Learning. In Long and Doughty Handbook of Language Teaching. Oxford, Wiley.

Pearson (2016) GSE Global Report Retrieved from https://www.english.com/blog/global-framework-raising-standards 5/12/2016.

Pienemann, M. (1987) Psychological constraints on the teachability of languages. In C. Pfaff (Ed.) First and Second Language Acquisition Processes. Rowley, MA: Newbury House. 143-168.

Rea-Dickins, P. M. (2001) Mirror, mirror on the wall: identifying processes of classroom assessment. Language Testing 18 (4), p. 429 – 462.

Selinker, L. (1972) Interlanguage. International Review of Applied Linguistics 10, 209-231.

Statista (2015) Publisher sales of ELT books in the United Kingdom from 2009 to 2013. Accessed from http://www.statista.com/statistics/306985/total-publisher-sales-of-elt-books-in-the-uk/ 9th November, 2015.

Thornbury, S. (2014) Who ordered the McNuggets? Accessed from http://eltjam.com/who-ordered-the-mcnuggets/ 9th November, 2015.

Walkley, A. and Dellar, H. (2015) Outcomes: Intermediate. National Geographic.

Wilkins, D. (1976) Notional Syllabuses: A Taxonomy and its Relevance to Foreign Language Curriculum Development. London: Oxford University Press.