Corder’s (1967) paper is often cited as the starting point for discussions of input in SLA. It included the famous claim:

The simple fact of presenting a certain linguistic form to a learner in the classroom does not necessarily qualify it for the status of input, for the reason that input is “what goes in” not what is available for going in, and we may reasonably suppose that it is the learner who controls this input, or more properly his intake (p. 165).

Corder here suggests that SLA is a process of learner-controlled development of interlanguages (ILs), “interlanguage” referring to a learner’s grammar, their evolving knowledge of the language. This marks a shift in the way SLA researchers perceived input: no longer a strictly external phenomenon, input becomes the interface between external stimuli and learners’ internal systems. Input is potential intake, and intake is what learners use for IL development; but it remains unclear what mechanisms and subprocesses are responsible for converting input into intake. We can start with Krashen.

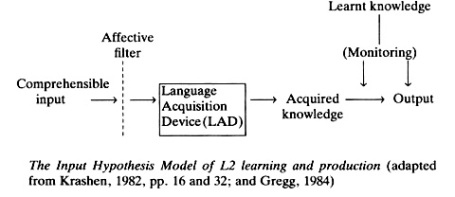

Krashen’s Input Model

In this model, comprehensible input is the same as intake. It contains mostly language the learner already knows, but also unknown elements, including some that correspond to the next step along the interlanguage development continuum. This comprehensible input has to get through the affective filter and is then processed by a special language processor which Krashen says is the same as Chomsky’s LAD. Thanks to this processor, some of the new elements in the input are subconsciously acquired and become part of the learner’s interlanguage. A completely different part of the mind processes a different kind of knowledge, which is learned by paying conscious attention to what teachers, books and other people tell the learner about the language. This conscious knowledge can be used to monitor and change output; it’s represented in the top right part of the diagram. Just by the way, Hulstijn (2013) points out that nearly 30 years after Krashen made his much-criticised acquisition/learning distinction, cognitive neuroscientists now agree that declarative, factual knowledge (Krashen’s ‘learned knowledge’) is stored in the medial temporal lobe (in particular in the hippocampus), whereas procedural, relatively unconscious knowledge (Krashen’s ‘acquired knowledge’) is stored and processed in various (mainly frontal) regions of the cortex.

We’ve already seen a number of objections to Krashen’s theory as a theory, but the important thing here is to see how he relies on the LAD (plus a monitor) to explain how we learn an L2. The theory thus leans heavily on Chomsky’s explanation of L1 acquisition and says that L2 acquisition is more or less the same: all we need to learn a language is comprehensible input, because we’re hard-wired with a device that allows us to make enough sense of enough of the input to slowly work out the system for ourselves.

Black boxes: the Processors

All theories of SLA, even usage-based theories, assume that some parts of the mind (or brain, for the strict empiricists) are involved in processing stimuli from the environment. The LAD is simply one attempt to describe what the processor does, namely, to provide rules for making sense of the input. The rules, which Chomsky describes in successive formulations of UG (best understood, I think, in terms of the principles and parameters model), help young children to map form to meaning. O’Grady gives the example of rules which help the child make sense of information about the type of meaning most often associated with particular word classes.

“For example, the acquisition device might ‘tell’ children that words referring to concrete things must be nouns. So language learners would know right away that words like dog, boy, house, and tree belong to that word class. This might just be enough to get started. Once children knew what some nouns looked like, they could start noticing other things on their own – like the fact that items in the noun class can occur with locator words like this and that, that they can take the plural ending, that they can be used as subjects and direct objects, that they are usually stressed, and so on.

Nouns with locator words: That dog looks tired. This house is ours.

Nouns with the plural ending: Cats make me sneeze. I like cookies.

Nouns used as subject or direct object: Dogs chase cats. A man painted our house.

Information of this sort can then be used to deal with words like idea and attitude, which cannot be classified on the basis of their meaning. (They are nouns, but they don’t refer to concrete things.) Sooner or later a child will hear these words used with this or that, or with a plural, or in a subject position. If she’s learned that these are the signs of nounhood, it’ll be easy to recognize nouns that don’t refer to concrete things. If all of this is on the right track, then the procedure for identifying words belonging to the noun class would go something like this. (Similar procedures exist for verbs, adjectives, and other categories.)

What the acquisition device tells the child: If a word refers to a concrete object, it’s a noun.

What the child then notices: Noun words can also occur with this and that; they can be pluralized; they can be used as subjects and direct objects.

What the child can then do: Identify less typical nouns (idea, attitude, etc.) based on how they are used in sentences.

This whole process is sometimes called bootstrapping. The basic idea is that the acquisition device gives the child a little bit of information to get started (e.g., a language must distinguish between nouns and verbs; if a word refers to a concrete object, it’s a noun) and then leaves her to pull herself up the rest of the way by these bootstraps.”
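O’Grady’s three-step procedure is explicit enough to sketch as a toy program. The Python below is a minimal illustration under stated assumptions, not O’Grady’s own model: the seed list `CONCRETE_REFERENTS`, the closed-class word lists, the tiny invented corpus and the crude plural test are all hypothetical, and only two of the cues he mentions (occurring with this/that, taking a plural) are modelled.

```python
from collections import defaultdict

# Hypothetical stand-in for the innate seed rule: words known to name concrete things.
CONCRETE_REFERENTS = {"dog", "boy", "house", "tree", "cat", "cookie"}
# Hypothetical closed-class words the learner is assumed to recognise already.
LOCATORS = {"this", "that"}
FUNCTION_WORDS = LOCATORS | {"is", "me", "i"}

# Tiny invented corpus of tokenized utterances (illustrative only).
CORPUS = [
    ["that", "dog", "is", "tired"],
    ["this", "house", "is", "big"],
    ["cats", "make", "me", "sneeze"],
    ["i", "like", "cookies"],
    ["this", "idea", "is", "good"],
    ["attitudes", "change"],
    ["that", "attitude", "bothered", "me"],
    ["dogs", "chase", "cats"],
]


def is_plural(word: str) -> bool:
    """Crude plural test: a final -s on a word longer than three letters."""
    return word.endswith("s") and len(word) > 3


def stem(word: str) -> str:
    """Strip the plural -s so that 'cats' and 'cat' count as one word."""
    return word[:-1] if is_plural(word) else word


def cue_profile(corpus: list[list[str]]) -> dict[str, set[str]]:
    """Record which distributional cues each content word has been heard with."""
    profile: dict[str, set[str]] = defaultdict(set)
    for utterance in corpus:
        for i, word in enumerate(utterance):
            if word in FUNCTION_WORDS:
                continue
            cues = profile[stem(word)]  # creates an empty entry on first sight
            if i > 0 and utterance[i - 1] in LOCATORS:
                cues.add("after_locator")
            if is_plural(word):
                cues.add("plural")
    return profile


def bootstrap_nouns(corpus: list[list[str]]) -> tuple[set[str], set[str]]:
    """Return (nouns found by the seed rule, nouns inferred from distribution)."""
    profile = cue_profile(corpus)
    # Step 1: the seed rule labels concrete-referent words as nouns.
    seeded = {w for w in profile if w in CONCRETE_REFERENTS}
    # Step 2: cues observed on those nouns become the learner's "signs of nounhood".
    noun_cues = set().union(*(profile[w] for w in seeded))
    # Step 3: any other word showing one of those cues is classified as a noun too.
    inferred = {w for w in profile if w not in seeded and profile[w] & noun_cues}
    return seeded, inferred


if __name__ == "__main__":
    seeded, inferred = bootstrap_nouns(CORPUS)
    print(sorted(seeded))    # ['cat', 'cookie', 'dog', 'house']
    print(sorted(inferred))  # ['attitude', 'idea']
```

On this toy corpus, "idea" and "attitude" are picked up purely from how they are used, never from their meaning. A single cue is a weak basis for classification (a verb like "looks" would pass the crude plural test), which is presumably one reason real learners need several converging cues.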

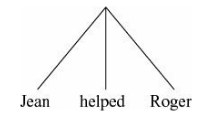

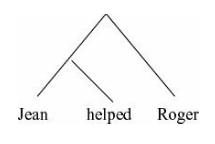

Of course, the rules that govern how words are put together are also needed. O’Grady calls this “a blueprint”. In a sentence like “Jean helped Roger”, the three words combine, but how?

“Does the verb combine directly with the two nouns?

Or does it combine first with its subject, forming a larger building block that then combines with the direct object?

Or does it perhaps combine first with its direct object, creating a building block that then combines with the subject?

How could a child possibly figure out which of these design options is right? For that matter, how could an adult? Once again, the acquisition device must come to the rescue by providing the following vital bits of information:

- Words are grouped into pairs.

- Subjects (doers) are higher than direct objects (undergoers).

With this information in hand, it’s easy for children to build sentences with the right design” (O’Grady,

So that’s one view of the “black box”, the language processor. In the view of many scholars working on SLA, it is the best explanation so far of how children acquire linguistic knowledge, and of how they know things about the language which are not present in the input: it answers the poverty of the stimulus question. The LAD offers an innate system of grammatical categories and principles which define language, constrain how language can vary and change, and explain how children learn language so successfully. And, with a few additional assumptions, it can explain SLA, and why most people find it so challenging, too.

VanPatten’s Input Processing Theory

VanPatten sees things slightly differently. His Input Processing (IP) theory is concerned with how learners derive intake from input, where intake is defined as the linguistic data actually processed from the input and held in working memory for further processing.

As such, IP attempts to explain how learners get form from input and how they parse sentences during the act of comprehension while their primary attention is on meaning. VanPatten’s model consists of a set of principles that interact in working memory, and takes account of the fact that working memory has very limited processing capacity. Content lexical items are searched out first since words are the principal source of referential meaning. When content lexical items and a grammatical form both encode the same meaning and when both are present in an utterance, learners attend to the lexical item, not the grammatical form. Here are VanPatten’s Principles of Input Processing:

P1. Learners process input for meaning before they process it for form.

P1a. Learners process content words in the input first.

P1b. Learners prefer processing lexical items to grammatical items (e.g., morphology) for the same semantic information.

P1c. Learners prefer processing “more meaningful” morphology before “less” or “nonmeaningful” morphology.

P2. For learners to process form that is not meaningful, they must be able to process informational or communicative content at no (or little) cost to attention.

P3. Learners possess a default strategy that assigns the role of agent (or subject) to the first noun (phrase) they encounter in a sentence/utterance. This is called the first-noun strategy.

P3a. The first-noun strategy may be overridden by lexical semantics and event probabilities.

P3b. Learners will adopt other processing strategies for grammatical role assignment only after their developing system has incorporated other cues (e.g., case marking, acoustic stress).

P4. Learners process elements in sentence/utterance initial position first.

P4a. Learners process elements in final position before elements in medial position.
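Principle P3 and its two sub-principles can be read as a small decision procedure for assigning the agent role. The sketch below is one hypothetical way to encode it in Python. VanPatten does not state an algorithm, so the animacy lexicon, the verb list, the two-noun restriction and the order in which the cues are checked are all assumptions; only animacy is modelled for P3a, not event probabilities.

```python
from typing import Optional

# Hypothetical toy lexicons; VanPatten's theory does not specify these.
ANIMATE = {"her", "him", "man", "woman", "horse", "boy", "girl"}
VERBS_NEEDING_ANIMATE_AGENT = {"kick", "follow", "kiss"}


def assign_agent(
    nouns: list[str],
    verb: str,
    *,
    object_marked: Optional[int] = None,
    uses_case_cues: bool = False,
) -> str:
    """Return the noun phrase a learner takes to be the agent (a sketch of P3-P3b).

    nouns: the two noun phrases in the order they were heard.
    object_marked: index of a noun carrying an object/case marker, if any.
    uses_case_cues: whether the developing system has incorporated case marking.
    """
    if len(nouns) != 2:
        raise ValueError("this sketch only handles two noun phrases")
    first, second = nouns
    # P3b (assumed precedence): case marking counts only once it has been incorporated.
    if uses_case_cues and object_marked is not None:
        return nouns[1 - object_marked]
    # P3a: lexical semantics can override the default, e.g. an inanimate first
    # noun with a verb whose agent must be animate.
    if (verb in VERBS_NEEDING_ANIMATE_AGENT
            and first not in ANIMATE and second in ANIMATE):
        return second
    # P3: default first-noun strategy.
    return first


if __name__ == "__main__":
    # "The fence was kicked by the horse": fence is heard first, semantics override.
    print(assign_agent(["fence", "horse"], "kick"))  # horse
    # Gloss of Spanish "La sigue el señor" ('The man follows her'): object clitic first.
    print(assign_agent(["her", "man"], "follow"))  # her (first-noun misreading)
    print(assign_agent(["her", "man"], "follow",
                       object_marked=0, uses_case_cues=True))  # man
```

With these toy lexicons, the learner who has not yet incorporated the object marker on "la" makes the classic first-noun misreading; the same input is parsed correctly once `uses_case_cues=True`, which is the developmental shift P3b describes.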

Perhaps the most important construct in the IP model is “communicative value”: the more communicative value a form has, the more likely it is to get processed and made available in the intake data for acquisition. It’s thus the forms with little or no communicative value which are least likely to get processed and which, without help, may never get acquired. Notice that this account, like Pienemann’s (discussed in Part 5) and O’Grady’s (see below), explains input processing in terms of rational decisions taken to make the best use of relatively scarce processing resources.
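The idea of forms competing for limited working-memory capacity on the basis of communicative value can also be sketched. In this hypothetical Python illustration, each form in an utterance like “Yesterday I walked home” gets an invented communicative-value score and attentional cost, and a greedy learner attends to forms in order of value until capacity runs out. The numbers are made up, and VanPatten’s model does not assign numeric values or prescribe a greedy strategy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Form:
    text: str
    communicative_value: float  # hypothetical score, higher = more meaning carried
    cost: int                   # hypothetical attentional cost in arbitrary units


# "Yesterday I walked home": past time is signalled twice, by the lexical item
# "yesterday" and by the suffix "-ed", so "-ed" gets a low (invented) value.
UTTERANCE_FORMS = [
    Form("yesterday", 0.9, 2),
    Form("walk", 0.8, 2),
    Form("home", 0.7, 2),
    Form("-ed", 0.2, 1),
]


def select_intake(forms: list[Form], capacity: int) -> list[str]:
    """Greedy sketch: attend to forms by descending communicative value while capacity lasts."""
    intake = []
    remaining = capacity
    for form in sorted(forms, key=lambda f: f.communicative_value, reverse=True):
        if form.cost <= remaining:
            intake.append(form.text)
            remaining -= form.cost
    return intake


if __name__ == "__main__":
    print(select_intake(UTTERANCE_FORMS, capacity=6))  # ['yesterday', 'walk', 'home']
    print(select_intake(UTTERANCE_FORMS, capacity=7))  # ['yesterday', 'walk', 'home', '-ed']
```

With a capacity of 6 the redundant past-tense “-ed” never makes it into intake; only when content processing leaves spare capacity does it get attended to, which is roughly what P1b and P2 predict.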

I’m zooming through these theories without doing any of them real justice, and I apologise to all the scholars whose work is getting such brief treatment, but I hope that both a picture of the various architectures proposed and the story of how SLA theories progressed can be got from all this. Before we go on, I can’t resist quoting what VanPatten says at the end of one of his books:

1. If you teach communicatively, you’d better have a working definition of communication. My argument for this is that you cannot evaluate what is communicative and what is appropriate for the classroom unless you have such a definition.

2. Language is too abstract and complex to teach and learn explicitly. That is, language must be handled in the classroom differently from other subject matter (e.g., history, science, sociology) if the goal is communicative ability. This has profound consequences for how we organize language-teaching materials and approach the classroom.

3. Acquisition is severely constrained by internal (and external) factors. Many teachers labor under the old present + practice + test model. But the research is clear on how acquisition happens. So, understanding something about acquisition pushes the teacher to question the prevailing model of language instruction.

4. Instructors and materials should provide student learners with level-appropriate input and interaction. This principle falls out of the previous one. Since the role of input often gets lip service in language teaching, I hope to give the reader some ideas about moving input from “technique” to the center of the curriculum.

5. Tasks (and not Exercises or Activities) should form the backbone of the curriculum. Again, language teaching is dominated by the present + practice + test model. One reason is that teachers do not understand what their options are, what is truly “communicative” in terms of activities in class, and how to alternatively assess. So, this principle is crucial for teachers to move toward contemporary language instruction.

6. A focus on form should be input-oriented and meaning-based. Teachers are overly preoccupied with teaching and testing grammar. So are textbooks. Students are thus overly preoccupied with the learning of grammar.

O’Grady (How Children Learn Language is the best book you’ll ever read on the subject) offers a different view. He proposes a ‘general nativist’ theory of first and second language acquisition, describing a modular acquisition device that does not include Universal Grammar. O’Grady sees his work as falling under the emergentist rubric, but since he sees the acquisition device as a modular part of the mind, he’s obviously a long way from the real empiricists in the emergentist camp. Interestingly for us, O’Grady accepts that there are sensitive periods involved in language learning, and that the problems adults face in L2 acquisition can be explained by the fact that adults have only partial access to the (non-UG) L1 acquisition device.

O’Grady describes a different kind of processor, doing more general things, but it’s still a language processor, and it still works not just on segments of the speech stream and on words but on syntax, thus still treating language as an abstract system governed by rules of syntax. With the more empiricist type of emergentist (Bates and MacWhinney’s Competition Model, for example), the talk is of a very general kind of processor doing the work, and this processor works almost exclusively on words and their meanings. Which brings us to the rub, so to speak.

As O’Grady argues so forcefully, the real disagreement between nativists and the emergentists who, unlike O’Grady, adopt a more or less empiricist epistemology is over what syntactic categories and structures are like. The dispute over how input gets processed is really a dispute about the nature of language. If you see language as a highly complex formal system best described by abstract rules that have no counterparts in other areas of cognition (O’Grady gives the requirement that sentences have a binary branching syntactic structure as one example of such a “rule”), then you see the processor, the acquisition device, as designed specifically for language. But if you see language in terms of its communicative function, then, since communication involves different types of considerations (O’Grady gives the examples of new versus old information, point of view, the status of speaker and addressee, and the situation), you’ll see the processor as a multipurpose acquisition device working on very simple data. In my opinion, such a view fails to explain either language or the acquisition process, but we’ll come to that. For now, I’m trying to sketch theories of SLA in such a way that we can draw teaching implications from them.

Just to remind you, my argument is that language is best seen as a formal system of representations and that we learn it differently from the way we learn other things. We learn language implicitly and subconsciously, but as adults learning an L2 our access to the key processors is limited, so we need to supplement this learning with a bit of attention to some “fragile” (non-salient, for example) elements. Which gets us nicely back to the main narrative.

Swain’s (1985) famous study of French immersion programmes led to her claim that comprehensible input alone can allow learners to reach high levels of comprehension, but that their proficiency and accuracy in production will lag behind, even after years of exposure. Further studies gave more support to this view, and to the view that comprehensible input is a necessary but not sufficient condition for proficiency in an L2. Swain’s argument was that we must give more attention to output, but what took greater hold was the view that learners need to “notice” formal features of the input.

Schmidt’s Noticing (again)

In Part 4, I discussed Schmidt’s view. Here’s the diagram:

As we saw, Schmidt completely rejects Krashen’s model, and insists that it’s ‘noticing’, not the unconscious workings of the LAD, that drives interlanguage development. I outlined my objections to even the modified 2001 version of Schmidt’s noticing construct in Part 4, so let’s focus on the main one here: the construct doesn’t clearly indicate the roles of conscious and subconscious, or explicit and implicit, learning. In the case of children learning their L1, the processing of input is mostly a subconscious affair, whether or not UG has anything to do with it. For those over the age of 16 learning an L2, according to Krashen, it’s also mostly a subconscious process, although even Krashen admits that some conscious hard work at learning helps to speed up the process and to reach a higher level of proficiency. But it’s not clear, at least to me, what Schmidt means by noticing, or to what extent he sees SLA as involving conscious learning. His 2001 paper seems to me to concede that implicit learning is still the main driver of interlanguage development, and I think that’s how Long, for example, reads Schmidt.

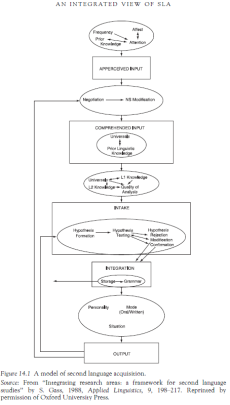

Gass: An Integrated view of SLA

Gass (1997), influenced by Schmidt, offers a more complete picture of what happens to input. She says it goes through the stages of apperceived input, comprehended input, intake, integration and output, thus subdividing Krashen’s comprehensible input into three stages (apperceived input, comprehended input and intake). I don’t quite get “apperceived input”: Gass says it’s the result of attention, in a similar sense to Tomlin and Villa’s (1994) notion of orientation, and Schmidt says it’s the same as his noticing, which doesn’t help me much. In any case, beyond what happens to intake in working memory, Gass stresses the importance of negotiated interaction during input processing and eventual acquisition. Here she adopts Long’s highly influential construct of negotiation for meaning, which refers to what learners do when there’s a failure in communicative interaction. As a result of this negotiation, learners get more usable input, give attention (of some sort) to problematic features of the L2, and make mental comparisons between their IL and the L2. Gass says that negotiated interaction enhances the input in three ways:

- it’s made more comprehensible;

- problematic forms that impede comprehension are highlighted and must be processed for communication to succeed;

- through negotiation, learners receive both positive and negative feedback juxtaposed immediately with the problematic form, and this close proximity facilitates hypothesis testing and revision (Doughty, 2001).

Many scholars have commented that these effects should be regarded as facilitators of learning, not mechanisms of learning, and I have to say that in general I find the Gass model a rather unsatisfactory compilation of bits. Still, it’s part of the story, and it’s certainly a well-considered, thorough attempt to explain how input gets processed.

We still have to look at the theories of Towell and Hawkins, Susanne Carroll, Jan Hulstijn, and then Bates & MacWhinney and Nick Ellis. The models reviewed so far agree on the need for comprehensible input; learners decode enough of the input to make some kind of conceptual representation, which can then be compared with linguistic structures which already form part of the interlanguage. As is so often the case with theories of learning (Darwin comes to mind), it’s the bits that don’t fit, or that can’t be parsed, that cause a “mental jolt in processing”, as Sun (2008) calls it. It’s the incomprehensibility of the input that triggers learning, as I’m sure Schmidt would agree.

REFERENCES

Corder, S. P. (1967). The significance of learners’ errors. IRAL, 5, 161-170.

Faerch, C., & Kasper, G. (1980). Processing and strategies in foreign language learning and communication. The Interlanguage Studies Bulletin (Utrecht), 5, 47-118.

Gass, S. M. (1997). Input, interaction, and the second language learner. Mahwah, NJ: Lawrence Erlbaum.

Hulstijn, J. (2013). Is the second language acquisition discipline disintegrating? Language Teaching, 46(4), 511-517.

Krashen, S. D. (1982). Principles and practice in second language acquisition. Oxford, UK: Pergamon.

Krashen, S. D. (1985). The input hypothesis: Issues and implications. London: Longman.

speech perception, reading, and psycholinguistics. New York: Academic Press.

O’Grady, W. (2005). How children learn language. Cambridge, UK: Cambridge University Press.

Schmidt, R. (2001). Attention. In P. Robinson (Ed.), Cognition and second language instruction (pp. 3-32). Cambridge, UK: Cambridge University Press.

Swain, M. (1985). Communicative competence: Some roles of comprehensible input and comprehensible output in its development. In S. M. Gass & C. G. Madden (Eds.), Input in second language acquisition (pp. 235-253). Rowley, MA: Newbury House.

VanPatten, B. (1996). Input processing and grammar instruction: Theory and research. Norwood, NJ: Ablex.

VanPatten, B. (2003). From input to output: A teacher’s guide to second language acquisition. New York: McGraw-Hill.

VanPatten, B. (2017). While we’re on the topic: BVP on language, language acquisition, and classroom practice. Alexandria, VA: The American Council on the Teaching of Foreign Languages.
