Susanne Carroll

I’ll start by noting Carroll’s objection to Schmidt’s and Gass’s theories. She argues that if input refers to observable sensory stimuli in the environment, then it can’t play any significant role in L2 learning because the stuff of acquisition – phonemes, syllables, morphemes, nouns, verbs, cases, etc. – consists of mental constructs that exist in the mind and not in the external environment. As Gregg has repeatedly said, “You can’t notice grammar!” Carroll (2001) says:

The view that input is comprehended speech is mistaken and has arisen from an uncritical examination of the implications of Krashen’s (1985) claims to this effect. … Comprehending speech is something which happens as a consequence of a successful parse of the speech signal. Before one can successfully parse the L2, one must learn its grammatical properties. Krashen got it backwards!

In Carroll’s theory, learners don’t attend to things in the input as such; they respond to speech signals by attempting to parse them, and failures to do so trigger attention to parts of the signal. Basing herself partly on Fodor’s work, Carroll (2017) argues that we have to make a distinction between types of input.

On the one hand, we need to talk about INPUT-TO-LANGUAGE-PROCESSORS, e.g., bits of the speech signal that are fed into language processors and which will be analysable if the current state of the grammar permits it. On the other hand, we need a distinct notion of INPUT-TO-THE-LANGUAGE-ACQUISITION-MECHANISMS, which will be whatever it is that those mechanisms need to create a novel representation. For most of the learning problems that we are interested in, the input-to-the-language acquisition-mechanisms will not be coming directly from the environment.

The Autonomous Induction Model

Carroll uses Jackendoff’s (1987) modularity model and Holland, Holyoak, Nisbett, and Thagard’s (1986) induction model to build her own Autonomous Induction model. She sees our linguistic faculty as comprised of a chain of representations acting on different levels: the lowest level interacts with physical stimuli, and the highest with conceptual representations. At the lowest level of representation, the integrative processor combines smaller representations into larger units, while the correspondence processor is responsible for moving the representations from one level to the next. Once representations are formed, they are categorized and combined according to UG-based or long-term memory-based rules. During successful parsing, rules are activated in each processor to categorize and combine representations. Failures occur when the rules are inadequate or missing. When that happens, the rule that comes closest to successfully parsing the specific unit is selected and undergoes the most economical and incremental revision. This process is repeated until parsing succeeds or is at least passable at that given level.
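The failure-driven loop just described can be made concrete with a toy sketch. This is not Carroll’s formalism: it assumes, purely for illustration, that a “rule” is a flat sequence of category labels, that “closest” means fewest mismatched positions, and that a revised rule is stored alongside the original rather than replacing it. The names `Rule`, `mismatches`, `parse`, `revise_once` and `acquire` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Toy stand-in for a parsing rule: a flat sequence of category labels."""
    pattern: tuple


def mismatches(rule, signal):
    """Count differing positions, plus any difference in length."""
    length_gap = abs(len(rule.pattern) - len(signal))
    return length_gap + sum(a != b for a, b in zip(rule.pattern, signal))


def parse(rules, signal):
    """Parsing succeeds only if some rule matches the signal exactly."""
    return any(r.pattern == tuple(signal) for r in rules)


def revise_once(rule, signal):
    """Apply one minimal change that moves the rule towards the signal."""
    pattern = list(rule.pattern)
    for i, (a, b) in enumerate(zip(pattern, signal)):
        if a != b:
            pattern[i] = b  # repair the first mismatching slot
            return Rule(tuple(pattern))
    if len(pattern) < len(signal):
        pattern.append(signal[len(pattern)])  # extend by one slot
    elif len(pattern) > len(signal):
        pattern.pop()  # shorten by one slot
    return Rule(tuple(pattern))


def acquire(rules, signal, max_steps=10):
    """If parsing fails, revise the closest rule step by step.

    Returns the (possibly extended) rule list and the number of revisions.
    """
    rules = list(rules)
    if parse(rules, signal):
        return rules, 0  # successful parse: acquisition is not triggered
    # Assumption: with no rules at all, revision starts from an empty rule.
    candidate = min(rules, key=lambda r: mismatches(r, signal), default=Rule(()))
    steps = 0
    while candidate.pattern != tuple(signal) and steps < max_steps:
        candidate = revise_once(candidate, signal)
        steps += 1
    rules.append(candidate)
    return rules, steps


grammar = [Rule(("DET", "N", "V")), Rule(("N", "V"))]
signal = ("DET", "ADJ", "N", "V")
new_grammar, steps = acquire(grammar, signal)
```

The only point of the sketch is the control flow: nothing is “noticed” in the input, and revision happens only when a parse fails.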

So Carroll’s explanation of how stimuli from the environment end up as linguistic knowledge is that two different types of processing are involved: processing for parsing and processing for acquisition. When the parsers fail, the acquisitional mechanisms are triggered (a view, as I’ve already suggested, which aligns with the notion of incomprehensible input).

For my purposes, what’s important in Carroll’s account is that speech signal processing doesn’t involve noticing – it’s a subconscious process where learners detect, encode and respond to linguistic sounds. Furthermore, Carroll argues that once the representation enters the interlanguage, learners don’t always notice their own processing of segments and the internal organization of their own conceptual representations; and that the processing of forms and meanings is often not noticed.

Carroll sees intake as a subset of stimuli from the environment, while Gass defines intake as a set of processed structures waiting to be incorporated into IL grammar. It seems to me that Carroll provides a better description of input, and she is surely right to say that cognitive comparison of representations (wherever it takes place) is largely automatic and subconscious. Awareness, Carroll concludes, is something that occurs, if at all, only after the fact; and that’s a conclusion which just about all the theories I’ve looked at come to.

Towell and Hawkins’ Model of SLA

Towell and Hawkins’ (1994) model begins with UG, which sets the framework within which linguistic forms in the L1 and L2 are related. Learners who begin an L2 after the age of seven have only partial access to UG, namely UG principles; they will transfer parameter settings from their L1, and where such settings conflict with L2 data, they may construct rules to mimic the surface properties of the L2. The second internal source is thus the first language. Learners may transfer a parameter setting, or UG may make possible a kind of mimicking.

The adoption of the “partial access to UG” hypothesis leads Towell and Hawkins to assume that there are two different sorts of knowledge involved in interlanguage development: linguistic competence (derived from UG and L1 transfer) and learned linguistic knowledge (derived from explicit instruction and negative feedback).

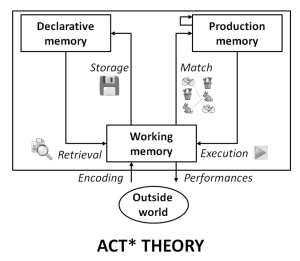

To explain the way in which interlanguage develops when simple triggering of parameters doesn’t happen, Towell and Hawkins use Levelt’s model of language production (1989) to introduce the distinction between procedural (subconscious, automatic) and declarative (conscious) knowledge, and then Anderson’s ACT* (Adaptive Control of Thought) model (1983), to explain how the declarative knowledge gets processed (see below).

The information processing mechanisms condition the way in which input provides data for hypotheses, the way in which hypotheses must be turned into productions for fluent use, and the final output of productions. (Towell and Hawkins, 1994: 248)

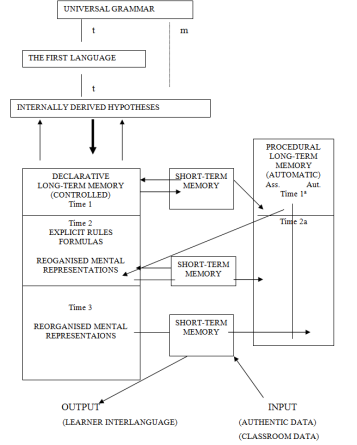

The full model is presented below:

Input and output pass through short-term memory, which determines the information available to long-term memories, and is used to pass information between the two types of long-term memory proposed: the declarative memory and the procedural memory.

Short-term memory consists of the set of nodes activated in memory at the same time, and it allows certain operations to be performed on relatively small amounts of information for a given time. The processes are either controlled (the subject is required to pay attention to the process while it is happening), or automatic. Automatic processes are inflexible and take a long time to set up. Once processes have been automatised, the limited capacity can be used for new tasks.

All knowledge initially goes into declarative memory; the internally derived hypotheses offer substantive suggestions for the core of linguistic knowledge and those parameters common to both L1 and L2. The other areas of language are worked out by the interaction of data with the internally derived hypotheses.

The model suggests four learning routes:

Route one:

confirmation by external data of an internal hypothesis leading to the creation of a production to be stored in procedural memory first in associative form (i.e. under attentional control) and then in autonomous form for rapid use via the short term memory. (Towell and Hawkins, 1994: 250)

Route two:

initial storage of a form-function pair in declarative memory as an unanalysed whole. If it cannot be analysed by the learner’s grammar but can be remembered for use in a given context, it may be shifted to procedural memory at the associative level. It may be re-called into declarative knowledge where it may be re-examined, and if it is now analysable, it may be converted to another level of mental organisation before being passed back to the procedural level. (Towell and Hawkins, 1994: 250-251)

Route three:

concerns explicit rules, like verb paradigms, vocabulary lists, lists of prepositions. This knowledge can only be recalled in the form in which it was learned, and can be used to revise and correct output. (Towell and Hawkins, 1994: 251)

Route four:

concerns strategies, which facilitate the proceduralisation of mechanisms for faster processing of input and greater fluency. These strategies do not interact with internal hypotheses. (Towell and Hawkins, 1994: 251)

Hypotheses derived from UG either directly or via L1 are available as declarative knowledge, i.e. hypotheses which are tested via controlled processing where learners pay attention to what they are receiving and producing. If the hypotheses are confirmed, Towell and Hawkins say they “can be quickly launched on the staged progression described by Anderson (1983, 1985).”

Discussion

UG is used to explain transfer, staged development and cross-learner systematicity. UG prevents the learner from entertaining “wild” hypotheses about the L2, and allows the learner to “learn” a series of structures by perceiving that a certain relationship between the L1 and L2 exists. Towell and Hawkins’ “partial access” view of UG and SLA is reflected in their belief that there is a lack of positive evidence available to L2 learners to enable them to reset the parameters already set in the L1, and that “the older you are at first exposure to an L2, the more incomplete your grammar will be”.

I’m not convinced! The part played by declarative knowledge seems particularly odd. How does knowledge of UG principles form part of declarative knowledge? And the ACT model looks to me like an awkward bolt-on. The distinction between declarative and procedural knowledge leaves unanswered the question of the nature of the storage of information in declarative and procedural forms, and there’s no explanation of how the externally-provided data interact with the internally-derived hypotheses.

More generally, this is a very complex model which pays scant regard to the Occam’s Razor criterion. There’s a profusion of terms and entities postulated by the theory – principles and parameters, declarative memory and production memory, procedural and declarative knowledge, associative and automatic procedural knowledge, linguistic competence and linguistic knowledge, mimicking, the use of a language module, a conceptualiser, and a formulator – which means that only the accumulation of research results from testing would make a proper evaluation possible. This hasn’t happened.

I’ve outlined it here, first because it was once quite influential; second because it’s another example of an attempt to explain how input leads to L2 knowledge by passing through a series of mental processing routines, and third because it gives me the chance to discuss Anderson’s ACT model.

Anderson’s ACT model

When applied to second language learning, the ACT model suggests that learners are first presented with information about the L2 (declarative knowledge) and then, via practice, this is converted into unconscious knowledge of how to use the L2 (procedural knowledge). The learner moves from controlled to automatic processing, and through intensive linguistically focused rehearsal, achieves increasingly faster access to, and more fluent control over, the L2 (see DeKeyser, 2007, for example).

The fact that nearly everybody successfully learns at least one language as a child without starting with declarative knowledge, and that millions of people learn additional languages without studying them (migrant workers, for example) are good reasons to doubt that learning a language is the same as learning a skill such as driving a car. Furthermore, the phenomenon of L1 transfer doesn’t fit well with a skills-based approach, and neither do putative sensitive periods (critical periods) for language learning. But the main reason for rejecting such an approach is that it contradicts all the SLA research findings related to interlanguage development which we’ve been examining in this review of SLA theories.

Firstly, as has been made clear, it doesn’t make sense to present grammatical constructions one by one in isolation because most of them are inextricably inter-related. As Long (2015) says:

Producing English sentences with target-like negation, for example, requires control of word order, tense, and auxiliaries, in addition to knowing where the negator is placed. Learners cannot produce even simple utterances like “John didn’t buy the car” accurately without all of those. It is not surprising, therefore, that interlanguage development of individual structures has very rarely been found to be sudden, categorical, or linear, with learners achieving native-like ability with structures one at a time, while making no progress with others. Interlanguage development just does not work like that.

Secondly, as we have seen, research has shown that L2 learners follow their own developmental route, a series of interlocking linguistic systems called “interlanguages”. Myles (2013) states that the findings on the route of interlanguage (IL) development are among the most well-documented findings of SLA research of the past few decades. She asserts that the route is “highly systematic” and that it “remains largely independent of both the learner’s mother tongue and the context of learning (e.g. whether instructed in a classroom or acquired naturally by exposure)”. The claim that instruction can influence the rate but not the route of IL development is probably the most widely accepted claim among SLA scholars today.

Pienemann comments:

Fifteen years later, Anderson appears to have revised his position. He states “With very little and often no deliberate instruction, children by the time they reach age 10 have accomplished implicitly what generations of Ph.D. linguists have not accomplished explicitly. They have internalised all the major rules of a language.” (Anderson, 1995: 364). In other words, Anderson no longer sees language acquisition as an instance of the conversion of declarative into procedural knowledge.

In addition, it is well-documented that procedural knowledge does not have to progress through a declarative phase. In fact, human participants in experiments on non-conscious learning were not only unaware of the rules they applied, they were not even aware that they had acquired any knowledge (Pienemann, 1998: 41).

Next up: emergentism. And that will be the last part of the review.

References

Anderson, J. (1983) The Architecture of Cognition. Cambridge, MA: Harvard University Press.

Carroll, S. (2017) Exposure and input in bilingual development. Bilingualism: Language and Cognition, 20, 1, 16-31.

Carroll, S. (2001) Input and Evidence. Amsterdam: Benjamins.

Krashen, S. (1985) The Input Hypothesis: Issues and Implications. New York: Longman.

Levelt, W. (1989) Speaking: From Intention to Articulation. Cambridge, MA: MIT Press.

Long, M. (2015) SLA and TBLT. Wiley.

Towell, R. and Hawkins, R. (1994) Approaches to second language acquisition. Clevedon: Multilingual Matters.